GCR Policy Newsletter (20 February 2023)

Air safety, seaweed production and AI regulation

This twice-monthly newsletter highlights the latest research and news on global catastrophic risk (GCR). It covers policy efforts around the world to reduce these risks, as well as policy-relevant research from the field of GCR studies.

Policy efforts on GCR

On 16 February, the US State Department released a Political Declaration on the Responsible Military Use of Artificial Intelligence and Autonomy:

“An increasing number of States are developing military AI capabilities, which may include using AI to enable autonomous systems. Military use of AI can and should be ethical, responsible, and enhance international security. Use of AI in armed conflict must be in accord with applicable international humanitarian law, including its fundamental principles. Military use of AI capabilities needs to be accountable, including through such use during military operations within a responsible human chain of command and control. A principled approach to the military use of AI should include careful consideration of risks and benefits, and it should also minimize unintended bias and accidents. States should take appropriate measures to ensure the responsible development, deployment, and use of their military AI capabilities, including those enabling autonomous systems.”

GCR in the media

“Why is there so little awareness of the potential consequences of the use of nuclear warheads? That’s the question at the core of new research published today by the University of Cambridge’s Centre for the Study of Existential Risk (CSER). It’s based on a survey last month of 3,000 people in the US and UK that was designed to discover how much is known about ‘nuclear winter’. It reveals a lack of awareness among US and UK populations of what a ‘nuclear winter’ would entail. Just 3.2% in the UK and 7.5% in the US said they had heard of ‘nuclear winter’ in contemporary media or culture.” No Sunny Days For A Decade, Extreme Cold And Starvation: ‘Nuclear Winter’ And The Urgent Need For Public Education (Forbes)

“The study, by the University of Otago and Adapt Research in New Zealand, looked at the impact of ‘a severe sun-reducing catastrophe’ such as a nuclear war, super volcano or asteroid strike on global agricultural systems. Researchers found Australia, New Zealand, Iceland, the Solomon Islands, and Vanuatu most capable of continuing to produce food despite the reduced sunlight and fall in temperatures - and help reboot a collapsed human civilisation.” Where you should head to survive an apocalyptic nuclear winter, according to scientists (Sky News)

Latest policy-relevant research

Combatting biological risks with air safety

Current indoor air quality standards do not cover airborne pathogen levels. Extending them to do so could meaningfully reduce global catastrophic biorisk from pandemics, according to a report by researchers from 1Day Sooner and Rethink Priorities. Mass deployment of indoor air quality interventions, such as ventilation, filtration and germicidal ultraviolet irradiation, would reduce transmission of a measles-like pathogen by an estimated 68 per cent. This amounts to about one-third of the total effort needed to prevent a pandemic of any similarly transmissible pathogen, and would serve as an important layer of biodefense. Bottlenecks to mass deployment of these technologies include a lack of clear standards, the cost of implementation, and the difficulty of changing regulations and public attitudes. (26 December 2022)

Policy comment: Governments can lead on air safety by improving standards, funding research and development, and upgrading government buildings. For example, in December 2022, the US administration announced a plan to improve indoor air quality as a tool for reducing the spread of airborne diseases. A key part of the programme is using the federal buildings portfolio of around 1,500 facilities to upgrade standards, conduct research and build best practices. A government-led initiative can also help foster a marketplace for air quality systems, capabilities and expertise that could then be deployed in the private sector.

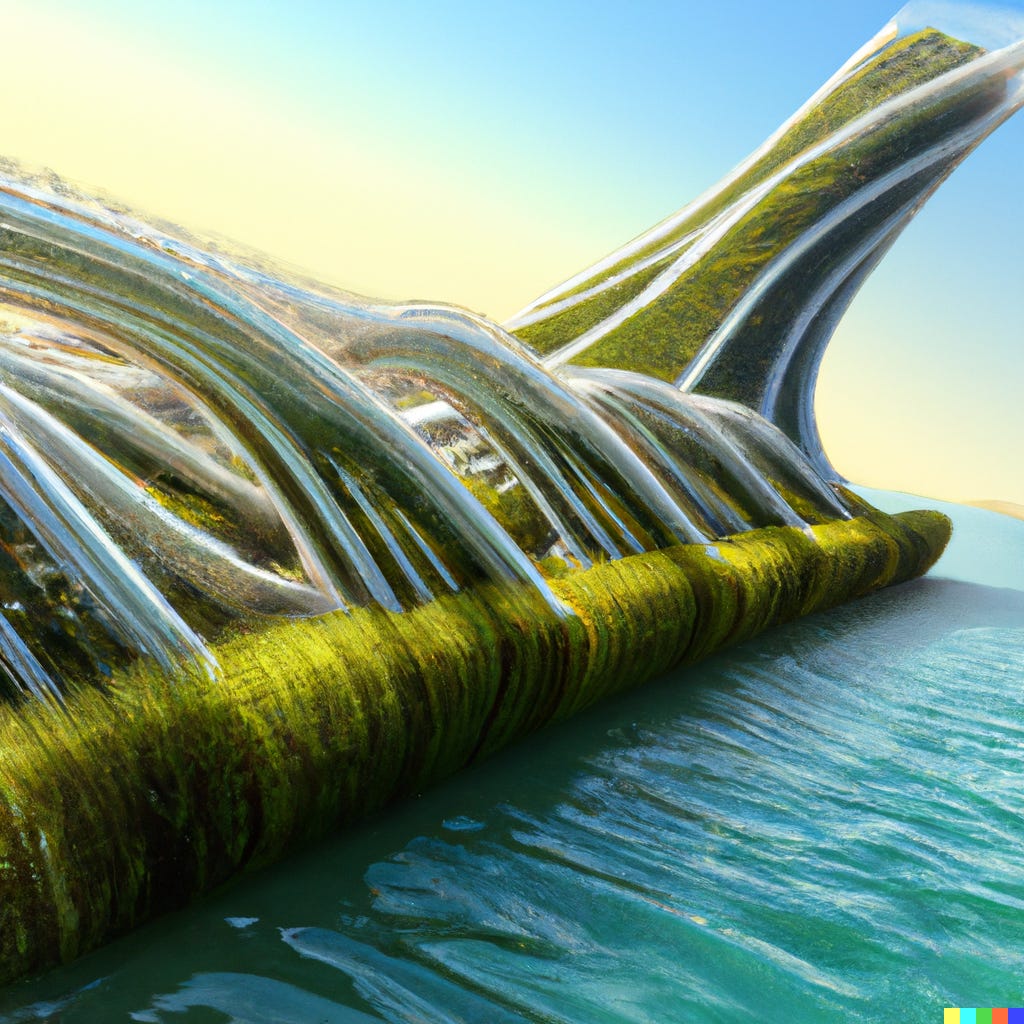

Scaling up seaweed production

Seaweed is a promising candidate for a resilient food source in abrupt sunlight reduction scenarios, such as a nuclear winter, because it grows quickly in a wide range of environmental conditions, according to a report by ALLFED. Seaweed can be grown in tropical oceans even after a nuclear war. Its growth rate is high enough to scale production up to the equivalent of 70 per cent of global human caloric demand within around 7 to 16 months, while using only a small fraction of the global ocean area. Although people could not live on a pure seaweed diet, this intervention would have an expected value of averting approximately 1.2 billion deaths from starvation. (30 January 2023)

Policy comment: Governments must prepare for catastrophes by building up the plans, infrastructure, logistics, capabilities and expertise needed for a massive scale-up of resilient food production. However, policy advocates may struggle to convince policy-makers to act on the basis of preparing for abrupt sunlight reduction scenarios. Advocates could instead frame policies for scaling up seaweed production around its present-day benefits, such as food security, remediation of coastal areas and climate change mitigation. On climate change, for example, seaweed aquaculture could sequester enough carbon dioxide to support several climate change mitigation scenarios.

Aligning AI safety across jurisdictions

AI alignment comprises value alignment problems at four levels (individual, organisational, national and global), according to a framework developed by New York University researchers. By aligning values at each of these levels and considering how the levels interact, developers can build advanced AI systems that are compatible with the world, or that are at least more aware of the ways in which they could cause conflict. Proposed solutions that do not consider how the individual, organisational, national and global levels integrate will be flawed. (14 December 2022)

Policy comment: A key challenge for AI regulation is clearly defining how AI serves or affects the national interest, and translating that into incentives and restrictions for AI organisations and developers, who typically sit in the private sector. Countries with little input into, or authority over, AI development might need to appeal to global rules and norms to shape the behaviour of other countries and their organisations. In this case, national governments might have very limited ability to shape the impact of AI on their own citizens.